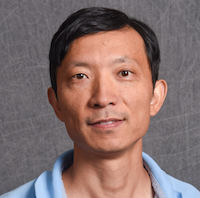

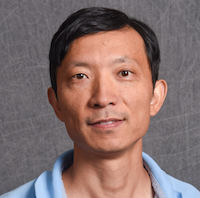

Dr. Xipeng Shen ("xi" is pronounced like 'see') is a full professor in Computer Science and the Director of High Performance Intelligent Computing at North Carolina State University (NCSU). He joined NCSU in August 2014 as a tenured associate professor through a Chancellor’s Faculty Excellence Program cluster hire. Before that, he was an Adina Allen Term Distinguished Associate Professor at the College of William and Mary. He received his Ph.D. from the University of Rochester in 2006. He leads the PICTure research group.

His research lies in the broad fields of Programming Systems and Machine Learning, with an emphasis on enabling extreme-scale data-intensive computing and intelligent computing through innovations in compilers, runtime systems, and machine learning algorithms. As a pioneer in manycore and GPU code compilation and optimization, his research has influenced the development of modern heterogeneous programming systems and machine learning systems. His contributions to the field have been recognized through awards such as the DOE Early Career Award, NSF CAREER Award, Google Faculty Research Award, and IBM CAS Faculty Fellow Award, alongside the NCSU University Faculty Scholars Award. His research has been sponsored by NSF, DOE, and NIH. He is an ACM Distinguished Member, an ACM Distinguished Speaker, and a senior member of IEEE.

In addition to his academic pursuits, Dr. Shen co-founded CoCoPIE Inc., a company dedicated to enhancing AI deployment. He has served as a consultant to, or an advisory board member for, several leading IT companies, including Intel, Microsoft, Huawei, Cisco, and Meta.